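Summary: This article describes how to install the NVIDIA GeForce GTX 1080 graphics driver and the CUDA Toolkit on Linux (CentOS 6.5). It covers downloading, installing, and verifying the graphics driver; downloading and installing CUDA; and installing, compiling, and running the bundled samples to verify the result.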

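I. Hardware, Software, and Operating System

Operating system: CentOS 6.5 (Linux/x86_64)

Graphics card: NVIDIA GeForce GTX 1080

Network: the CUDA installation requires the machine to have network access so that yum commands can run.

Note: before installing, confirm that your operating system is supported and that the software prerequisites are in place. For details, see NVIDIA's Installation Guide for Linux.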

二. NVIDIA显卡驱动的安装

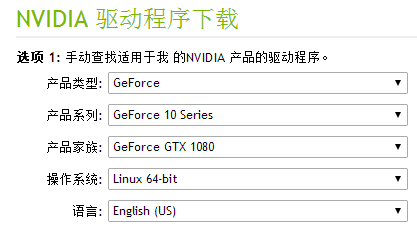

1. 下载NVIDIA显卡驱动

驱动下载地址:http://www.nvidia.cn/Download

图1. GTX 1080驱动下载界面

按图1填好参数,点击搜索可跳转到下载界面,下载即可。本例中,下载得到的安装文件为:NVIDIA-Linux-x86_64-384.59.run.

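2. Installing the NVIDIA driver

The installation has three steps, all of which require root privileges:

- Stop the X server by switching to text mode (runlevel 3), and delete the X lock files

- Install the driver

- Restart the X server

First, switch to runlevel 3 and delete the X lock files:

[root@master work]# init 3

[root@master work]# rm -fr /tmp/X*

Note that X lock files are normally hidden files such as /tmp/.X0-lock, which the pattern /tmp/X* does not match, so check for those as well.

Then run the installer and follow the prompts to choose the options and complete the installation:

[root@master work]# sh NVIDIA-Linux-x86_64-384.59.run

Finally, switch back to runlevel 5 to restart the X server:

[root@master work]# init 5

For the full procedure, see the NVIDIA installation guide: http://www.nvidia.com/object/linux_readme_install.html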

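3. Verifying the driver installation

1. Check that the hardware is correctly recognized

On this machine the graphics card is a PCI device, so list the PCI devices:

[gkxiao@master gpu]$ lspci | grep -i vga

81:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1080] (rev a1)

The output contains "GeForce GTX 1080", so the hardware is correctly recognized. Note that lspci lists the device whether or not a driver is loaded; the driver itself can be confirmed with /proc/driver/nvidia/version or nvidia-smi, both of which appear later in this article.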
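The lspci check above can be wrapped in a small helper for use in scripts. This is only a sketch: the function name `has_nvidia_vga` is made up for illustration, and it assumes the card is listed as a "VGA compatible controller", as in the output shown above.

```shell
#!/bin/sh
# Hypothetical helper (not from the original article): reads `lspci`
# output on stdin and succeeds if a line mentions both "VGA" and "NVIDIA".
has_nvidia_vga() {
    # Case-insensitive match; output is discarded, only the exit status matters.
    grep -iE 'vga.*nvidia' >/dev/null
}

# Typical use on a real machine:
#   lspci | has_nvidia_vga && echo "NVIDIA GPU detected"
```

The helper reads from stdin rather than calling lspci itself, so it can also be tried against saved output.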

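2. Update the PCI ID database

If "GeForce GTX 1080" does not appear in the PCI listing, update the PCI ID database and then check the PCI devices again.

Update the PCI IDs as root:

[root@master gpu]# update-pciids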

三. 安装CUDA

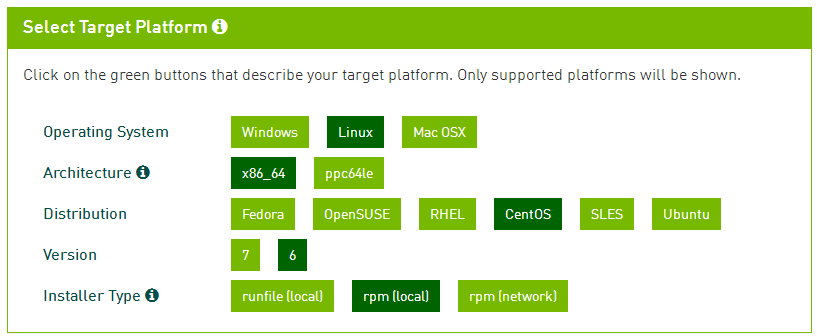

1. CUDA下载

CUDA下载地址:https://developer.nvidia.com/cuda-downloads

图2. CUDA下载

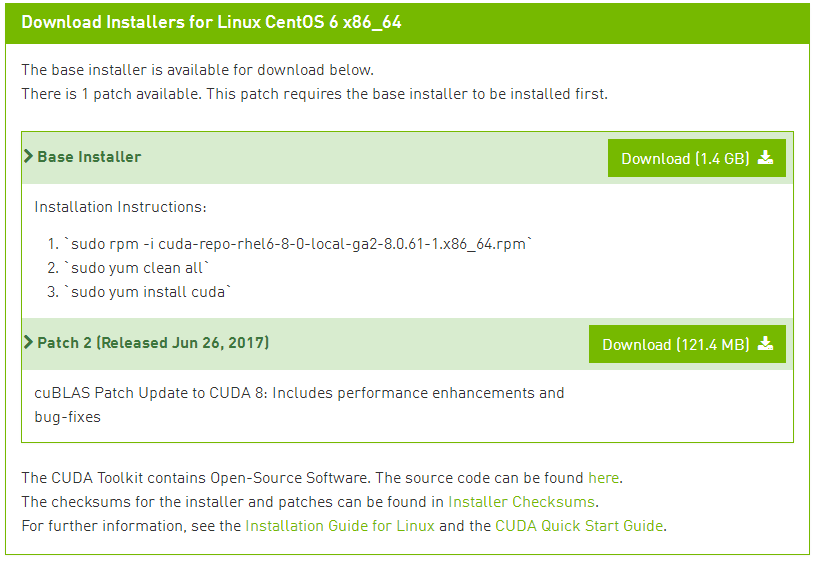

2. CUDA安装

详细的安装过程、安装前准备:Installation Guide for Linux

下载页面的也提供了简略的安装步骤(图3)。

图3. CUDA安装

3. Verifying the CUDA installation

3.1 Check the driver version

[gkxiao@master ~]$ cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 375.26 Thu Dec 8 18:36:43 PST 2016

GCC version: gcc version 4.4.7 20120313 (Red Hat 4.4.7-18) (GCC)
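If only the version number is needed, for example to compare it with the installer that was downloaded, it can be extracted from the NVRM line. The following is a minimal sketch; the function name `nvidia_driver_version` is an assumption, and the pattern is based solely on the line format shown above.

```shell
#!/bin/sh
# Hypothetical helper: prints the driver version found on the
# "NVRM version:" line of /proc/driver/nvidia/version (read from stdin).
nvidia_driver_version() {
    # Capture the first dotted number that follows "Kernel Module".
    sed -n 's/^NVRM version:.*Kernel Module *\([0-9][0-9.]*\).*/\1/p'
}

# Typical use on a real machine:
#   nvidia_driver_version < /proc/driver/nvidia/version
```

For the sample output above, the helper prints 375.26.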

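3.2 Install and compile the samples shipped with CUDA

First install the CUDA samples. The command takes this form:

$ cuda-install-samples-8.0.sh <directory>

For example, to install into the current directory:

[gkxiao@master work]$ cuda-install-samples-8.0.sh .

When it finishes, a new directory named NVIDIA_CUDA-8.0_Samples is created under the current directory.

Change into that directory:

[gkxiao@master work]$ cd NVIDIA_CUDA-8.0_Samples

Compile the samples: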

[gkxiao@master NVIDIA_CUDA-8.0_Samples]$ make

3.3 Verify the CUDA device

Use deviceQuery, a program included with the CUDA samples, to verify the installation:

[gkxiao@master NVIDIA_CUDA-8.0_Samples]$ 1_Utilities/deviceQuery/deviceQuery

Output similar to the following appears:

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GTX 1080"

CUDA Driver Version / Runtime Version 8.0 / 8.0

CUDA Capability Major/Minor version number: 6.1

Total amount of global memory: 8113 MBytes (8507162624 bytes)

(20) Multiprocessors, (128) CUDA Cores/MP: 2560 CUDA Cores

GPU Max Clock rate: 1734 MHz (1.73 GHz)

Memory Clock rate: 5005 Mhz

Memory Bus Width: 256-bit

L2 Cache Size: 2097152 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 129 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 8.0, CUDA Runtime Version = 8.0, NumDevs = 1, Device0 = GeForce GTX 1080

Result = PASS

Verification passed; CUDA can now be used for computation.
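In a script, the same check comes down to looking for the final "Result = PASS" line. The sketch below assumes the output format shown above; the function name `device_query_passed` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads deviceQuery output on stdin and succeeds
# only if it contains a line that is exactly "Result = PASS"
# (trailing whitespace is tolerated).
device_query_passed() {
    grep -E '^Result = PASS[[:space:]]*$' >/dev/null
}

# Typical use from the samples directory:
#   1_Utilities/deviceQuery/deviceQuery | device_query_passed && echo "CUDA OK"
```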

Next, try the CUDA compiler, nvcc. Printing its version gives:

[gkxiao@master python]$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2016 NVIDIA Corporation

Built on Tue_Jan_10_13:22:03_CST_2017

Cuda compilation tools, release 8.0, V8.0.61

IV. Setting the CUDA Environment Variables

Using CUDA requires the following environment variables to be set:

export PATH=$PATH:/usr/local/cuda/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda/lib64
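If these lines go into a shell startup file, each new nested shell appends the same directories again, and an empty LD_LIBRARY_PATH ends up with a leading colon. One way to avoid both is sketched below. The helper `append_path` and the `CUDA_HOME` variable are assumptions introduced for illustration; /usr/local/cuda is the install prefix used above.

```shell
#!/bin/sh
# Hypothetical helper: prints value $1 with directory $2 appended,
# unless $2 is already one of the colon-separated entries.
append_path() {
    case ":$1:" in
        *":$2:"*) printf '%s\n' "$1" ;;          # already present: unchanged
        *)
            if [ -n "$1" ]; then
                printf '%s\n' "$1:$2"            # append after existing entries
            else
                printf '%s\n' "$2"               # empty value: no stray colon
            fi
            ;;
    esac
}

# Assumed install prefix; override CUDA_HOME if CUDA lives elsewhere.
CUDA_HOME="${CUDA_HOME:-/usr/local/cuda}"
PATH="$(append_path "$PATH" "$CUDA_HOME/bin")"
LD_LIBRARY_PATH="$(append_path "${LD_LIBRARY_PATH:-}" "$CUDA_HOME/lib64")"
export PATH LD_LIBRARY_PATH
```

Sourcing this snippet more than once leaves both variables unchanged after the first time.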

V. Deep Learning

Users of deep learning networks also need to install the cuDNN library. For download and installation instructions, see:

https://developer.nvidia.com/rdp/cudnn-download

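VI. After Submitting a Job, How Do You Know the GPU Is Being Used?

Use the nvidia-smi command. For example, while a TensorFlow training job is running, typing nvidia-smi produces output like the following: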

    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 410.48                 Driver Version: 410.48                    |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |===============================+======================+======================|
    |   0  GeForce GTX 1080    Off  | 00000000:81:00.0 Off |                  N/A |
    | 48%   74C    P2    86W / 180W |   1097MiB /  8119MiB |     48%      Default |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                       GPU Memory |
    |  GPU       PID   Type   Process name                             Usage      |
    |=============================================================================|
    |    0     24932      C   ...c/apps/miniconda3/envs/rdkit/bin/python  1087MiB |
    +-----------------------------------------------------------------------------+

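The last row, in the Processes table, shows what is running on the GPU: the GPU device number, the process ID (PID), the program, and the amount of GPU memory it is using.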
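For scripted checks, nvidia-smi also has a query mode that prints comma-separated values instead of the table. The sketch below assumes the query `nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits`, which prints one line per GPU such as `0, 48, 1097, 8119`. The function name `report_gpu_usage` and the "busy means utilization above 0%" rule are illustrative choices, not part of nvidia-smi.

```shell
#!/bin/sh
# Hypothetical helper: reads "index, util, used, total" CSV lines on stdin
# and prints a one-line summary per GPU.
report_gpu_usage() {
    awk -F', *' '{
        # Treat any non-zero utilization as "busy" (an arbitrary threshold).
        state = ($2 + 0 > 0) ? "busy" : "idle"
        printf "GPU %s: %s%% util, %s/%s MiB, %s\n", $1, $2, $3, $4, state
    }'
}

# Typical use on a real machine:
#   nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total \
#              --format=csv,noheader,nounits | report_gpu_usage
```

A similar query, `nvidia-smi --query-compute-apps=pid,process_name,used_memory --format=csv`, lists the processes currently using the GPU.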